Cloud computing is all about running a service or a server using a pool of computers. The computers could be your own or you could lease time on a commercial cloud.

Who's Who

Some of the big names in this space are Amazon, Google, GoGrid and ElasticHosts. Apart from Google, they let you run a complete operating system and whatever other software you like on their infrastructure, which is why it is called Infrastructure as a Service (IaaS). Google's offering is a bit different: it is a Platform as a Service (PaaS). Google also offers applications like Google Docs (word processor, spreadsheet, etc.), which is known as Software as a Service (SaaS).

Infrastructure as a Service

Infrastructure as a Service interests me at the moment. It promises to disrupt current practice. No longer does a company need to buy servers for their business. They can lease time from one or more providers without having to outlay any capital. Nor do they need to maintain or upgrade any hardware.

How does it work?

An example may help.

Your IT department wants to upgrade one third of your servers, a normal request that you might get each year. Instead of investing in new machines, they suggest that the company lease time on the ElasticHosts (EH) server cloud, with Amazon's EC2 as a backup site.

The IT people would set up accounts, request a certain number of virtual servers and copy the disks of the current servers to EH and EC2. Each virtual server would be configured with the necessary CPUs, RAM and network bandwidth. The IT department would then administer the servers from your offices as if they were real servers: they can start them, pause them and stop them just like a real server.

But they can also upgrade them in an instant. And, they tell you, it costs 25c per hour for a 1GHz CPU, 1GB RAM, 1000GB disk and 100GB network traffic, or $180/month if a server runs around the clock. But they say that at night they can turn half the servers off so it would cost about $120/month on average. They can do the same on weekends and on public holidays too.
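The arithmetic behind those figures can be checked with a short sketch. It assumes a 30-day billing month and that a server costs nothing while it is switched off. The post does not say how long "night" lasts, so the 16 hours used below is only one assumption that happens to reproduce the $120 figure. The function names are made up for this illustration.

```python
HOURS_PER_DAY = 24
DAYS_PER_MONTH = 30  # assumption: a 30-day billing month


def monthly_cost(rate_per_hour, hours_on_per_day, days=DAYS_PER_MONTH):
    """Cost of one virtual server that is billed only while it is running."""
    return rate_per_hour * hours_on_per_day * days


def average_cost_per_server(rate_per_hour, fraction_switched_off,
                            hours_off_per_day, days=DAYS_PER_MONTH):
    """Average monthly cost per server when part of the pool is off each day."""
    always_on = monthly_cost(rate_per_hour, HOURS_PER_DAY, days)
    part_time = monthly_cost(rate_per_hour,
                             HOURS_PER_DAY - hours_off_per_day, days)
    return ((1 - fraction_switched_off) * always_on
            + fraction_switched_off * part_time)


RATE = 0.25  # dollars per hour, from the example above

print(monthly_cost(RATE, HOURS_PER_DAY))           # one server, always on
print(average_cost_per_server(RATE, 0.5, 16))      # half the pool off 16 h/day
print(average_cost_per_server(RATE, 0.5, 12))      # half the pool off 12 h/day
```

Under these assumptions, the always-on server comes to $180, switching half the pool off for 16 hours a day averages $120 per server, and a 12-hour night averages $135. Weekend and holiday shutdowns would lower the average further.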

And if the company had a busy period, they could order more servers or upgrade the existing ones almost instantly, then downgrade them again afterwards.

Private Clouds

It sounds like magic. But these clouds can also be established on your existing infrastructure, and if you need additional capacity you can lease it by the hour.

Making a Virtual Server

I wondered how hard it might be to make a disk image to run on a cloud. It turns out to be rather easy.

I have an Ubuntu Linux virtual machine running in VMware Fusion. It allows me to run Linux on my Mac and it works well (I should mention Sun's VirtualBox here as well, which does the same job and is free and Open Source).

Ubuntu provides a program that makes the creation of virtual machines an almost trivial task.

sudo ubuntu-vm-builder kvm jaunty

'sudo' allows the program to run as an administrator.

'kvm' specifies that I want a virtual machine that will run on a KVM-based cloud such as ElasticHosts. I could have used 'ec2' instead to make an image that would run on Amazon's EC2.

'jaunty' specifies the version of Ubuntu server that I wanted it to be.

(I used some additional command line options that are not needed.)

A little while later, I had a directory containing a disk image and the command necessary to run it. With some other configuration changes it is possible to create a VM in a few minutes.

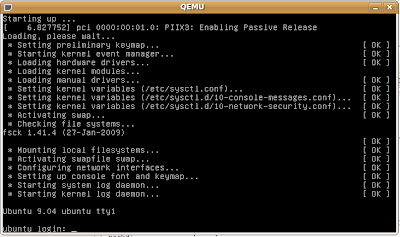

Since my Ubuntu machine is itself a virtual machine running on a Mac, I did not think that I could test the new VM. But I thought that I would try to run it to see what it might do. It began by complaining that it could not find KVM support and then ran the VM in an emulator (QEMU).

The machine booted like a real PC and eventually gave me a login prompt.
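The fallback from KVM to plain emulation can be sketched in a few lines. This is not the run script that ubuntu-vm-builder generates, only an illustration of the idea. The image name `disk0.qcow2`, the qcow2 format, the 512 MB of memory and the helper names are all assumptions. On Linux, KVM support normally shows up as the device `/dev/kvm`, and QEMU takes `-enable-kvm` to use it.

```python
import os


def kvm_available(device="/dev/kvm"):
    """Return True if the KVM device node exists on this (Linux) host."""
    return os.path.exists(device)


def build_qemu_command(image_path, use_kvm, memory_mb=512):
    """Build a QEMU command line; hardware acceleration is added only if asked."""
    cmd = [
        "qemu-system-x86_64",
        "-m", str(memory_mb),
        # assumption: the builder produced a qcow2 disk image
        "-drive", f"file={image_path},format=qcow2",
    ]
    if use_kvm:
        cmd.append("-enable-kvm")  # without this flag QEMU emulates the CPU in software
    return cmd


# "disk0.qcow2" is a hypothetical image name used for illustration.
command = build_qemu_command("disk0.qcow2", kvm_available())
print(" ".join(command))
```

Inside a guest such as my VMware machine, `/dev/kvm` is usually absent, so the command is built without `-enable-kvm` and the VM runs under slower software emulation.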

Appliances

Some companies are now offering their applications or operating systems as a VM to download, ready to go. VMware has a large selection of pre-built VMs.

The Future

It looks interesting. Some other work in this area focuses on standardising the management interface for a cloud of VMs, on standardising the VMs themselves so that any VM can run on any public or private cloud, and on schedulers that let an administrator prioritise VMs and schedule the start-up and shutdown of any VM. Read more about OpenNebula and Haizea.