In case you missed the news, Google has announced that it will build a secure operating system for, well, any device: desktops, netbooks, tablets, phones.

Basically, it is "Google Chrome running within a new windowing system on top of a Linux kernel" and for security they are "completely redesigning the underlying security architecture of the OS so that users don't have to deal with viruses, malware and security updates."

As you might imagine, this has stirred up quite some commentary from magazines and bloggers such as Gizmodo and TechCrunch, and Wikipedia always has something to offer.

But I think most have missed the other key ingredients - everyone but ToxProX at least.

Google is not just working on a new OS. It is also working on at least two other related projects: Native Client and O3D.

O3D

I'll cover O3D first since I don't have much to write. The web site suggests that this stands for 'Open web standard for 3D graphics'.

It is a browser plugin that allows web developers to add 3D graphics to their applications. I think the short video demo describes it best.

Basically, GPU accelerated 3D graphics for your browser.

Native Client

Native Client, also known as NaCl (but never as Salt), is a way to run 'normal' programs safely.

A normal program is a word processor; a game; a photo editor; a VoIP client; a movie maker; or a 3D earth browser. Most of the software you use consists of normal programs, which are usually compiled to machine code for your CPU type and your operating system.

What is Native?

A native program runs directly on the CPU, rather than inside another program that decodes its instructions and then performs the operations.

That's not very clear so I will try a metaphor:

It is like reading a book. A book written in English is easily read by someone who understands or natively speaks English. Give them a book in German, and a German-English dictionary, and they could also read the book - but much slower.

For each word that they have not seen before, they would have to look up the word in the German-English dictionary, read the English meaning and then decode the meaning of the German sentence. They would have to do this for every sentence.

Initially they would be slow, but as their German vocabulary grows their sentence translation speed would improve because they are optimising the process of looking up the English meaning of a German word by memorizing it. But they will never be as fast as a native speaker since they are always translating.

Many programs written in Java, JavaScript, Python, Ruby, C#, Lisp, Perl and Flash work this way. The program is written in a non-native language and another piece of software does the translation. Fast CPUs and clever optimizing techniques allow them to run quickly, but a native program that did the same thing would run at least twice as fast, and more often at least 10 times faster.
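To make the translation overhead concrete, here is a minimal sketch of an interpreter for a made-up stack language. The instruction names (`PUSH`, `ADD`, `MUL`) and the `run` function are invented for this illustration and do not correspond to any real language runtime. The point is only that every instruction has to be looked up and decoded before any useful work happens, much like the reader with the dictionary.

```python
# Toy stack-machine interpreter. The instruction set is hypothetical and
# exists only to show the decode step that a native program does not pay for.

def run(program):
    """Execute a list of (opcode, *operands) tuples and return the result."""
    stack = []
    for op, *args in program:
        # This if/elif chain is the "dictionary lookup": work spent on
        # figuring out what to do rather than on doing it.
        if op == "PUSH":
            stack.append(args[0])
        elif op == "ADD":
            b, a = stack.pop(), stack.pop()
            stack.append(a + b)
        elif op == "MUL":
            b, a = stack.pop(), stack.pop()
            stack.append(a * b)
        else:
            raise ValueError(f"unknown instruction: {op}")
    return stack.pop()


def direct():
    """The same calculation written directly in the host language."""
    return (2 + 3) * 4


# (2 + 3) * 4 expressed in the toy language.
program = [("PUSH", 2), ("PUSH", 3), ("ADD",), ("PUSH", 4), ("MUL",)]
interpreted_result = run(program)
direct_result = direct()
```

Both paths produce the same answer; the interpreted one simply takes more steps to get there.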

(A side note here is that CPUs are not getting that much faster any more, which is why all language developers are working on ways to make their interpreters faster or to get their compilers to generate faster and often smaller code.)

So, why aren't all programs written to run natively? The answer is portability. Interpreted programs can generally run on any operating system and on any CPU. Speed is traded for portability. It also means that we often lose the benefits of hardware accelerated graphics.

Native Client CPU Support

Most home and business computers use just 2 types of CPUs: x86-based and ARM-based.

Intel, AMD and some other manufacturers make x86 CPUs, which are generally used in servers, desktops and, more recently, netbook computers.

ARM licenses its designs to many manufacturers, which integrate various modules and produce very low-powered chips for use in mobile devices such as iPods and mobile phones.

NaCl is being built for these two CPU architectures. This doesn't prevent future support for other CPUs like IBM's PowerPC, Sun's SPARC or Sony's Cell processor.

Native Client Security

Back to Native Client. This could be part of web 3.0: All applications loaded from the web, running securely in the browser at native application speeds? Maybe web 2.5?

NaCl tackles security in a new way. The programs are run in a sandbox. The sandbox disassembles the program, enforces memory access rules, rejects code that doesn't obey strict rules to prevent it jumping out of the sandbox, and only allows interaction with the real world through the limited API back to the browser or perhaps the ChromeOS.

This solution has some interesting benefits:

- There is no need to enforce a trusted development chain so developers don't need a special, trusted compiler and developer certificate. Signed applications are unnecessary.

- Buggy code cannot crash the OS, nor can it do any damage, since it is running in a sandbox which does not have access to hardware or the OS.

- Malware can't make use of bugs to gain privileged access to the OS and the sandbox ensures all code stays in the sand. So, malware cannot spread itself, access any file on the OS or leave the sandbox (or is it a salt box?). Could this be the end of Malware as we know it? I think Google thinks so.
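The idea of checking a program before it is allowed to run can be sketched in a few lines. This is not Native Client's actual validator, which works on real machine code; it is a toy that assumes a made-up instruction list, a hypothetical sandbox size (`SANDBOX_SIZE`) and a hypothetical set of banned instructions (`FORBIDDEN`). It only shows the shape of the approach: inspect every instruction up front and refuse the whole program if any rule is broken.

```python
# Toy pre-execution validator. Instruction names, SANDBOX_SIZE and FORBIDDEN
# are assumptions made for this sketch, not part of Native Client.

SANDBOX_SIZE = 1024             # addresses 0..1023 belong to the sandbox
FORBIDDEN = {"SYSCALL", "INT"}  # instructions that would talk to the OS directly


def validate(program):
    """Return a list of rule violations; an empty list means 'accepted'."""
    errors = []
    for index, (op, *args) in enumerate(program):
        if op in FORBIDDEN:
            errors.append(f"{index}: forbidden instruction {op}")
        elif op == "JMP":
            target = args[0]
            # A jump must land on an instruction inside the program itself.
            if not 0 <= target < len(program):
                errors.append(f"{index}: jump to {target} leaves the program")
        elif op in ("LOAD", "STORE"):
            address = args[0]
            # Memory accesses must stay inside the sandbox's own memory.
            if not 0 <= address < SANDBOX_SIZE:
                errors.append(f"{index}: address {address} is outside the sandbox")
    return errors


well_behaved = [("LOAD", 16), ("STORE", 32), ("JMP", 0)]
misbehaving = [("LOAD", 4096), ("SYSCALL",), ("JMP", 99)]

accepted = validate(well_behaved) == []
rejections = validate(misbehaving)
```

Because the check happens before the code runs, it does not matter who compiled the program or whether it was signed.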

Write Once, Run Anywhere

An unrealized dream of software architects is to write a program once, and to be able to run it on anything. Native Client makes this a reality. It will be a new program format that allows an application to run in any web browser (with a NaCl plugin I guess) on any operating system and on any hardware.

It also means that software will no longer need to be installed.

Enter Google Gears

Google Gears allows you to run your Google applications while off-line. It provides a database for local caching of data, HTML, images, JavaScript and perhaps NaCl programs as well.

Once you are back on the net, your locally generated data will synchronise with your on-line data.

Say someone creates a game for Native Client. You would agree to the license (if any), make any payment and run the game. The game would be cached locally so you won't need to download it each time you want to play, and this cache will allow you to use it when your computer is not connected to the web.

Now if the developer fixes a bug, or adds a new feature, the browser will check to see if the cached version is up-to-date. If not, it will automatically download and cache the latest version.
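The check-then-download flow I am guessing at here could look something like the sketch below. None of this is the Gears API; `AppCache`, `load` and `fake_download` are names invented for the illustration, and passing `None` as the latest version is just my stand-in for "the computer is off-line".

```python
# Sketch of a version-checked local cache. All names are hypothetical;
# this is not how Gears is actually implemented.

class AppCache:
    def __init__(self):
        self._entries = {}  # application name -> (version, payload)

    def load(self, name, latest_version, download):
        """Return the application, downloading it only when the cache is stale.

        latest_version is None when we are off-line and cannot ask the server.
        """
        cached = self._entries.get(name)
        if latest_version is None:
            if cached is None:
                raise LookupError(f"{name} is not cached and we are off-line")
            return cached[1]
        if cached is not None and cached[0] == latest_version:
            return cached[1]  # cache is up-to-date, no download needed
        payload = download(name, latest_version)
        self._entries[name] = (latest_version, payload)
        return payload


downloads = []  # records every download so we can see when one happens


def fake_download(name, version):
    """Stand-in for fetching the program from the web."""
    downloads.append((name, version))
    return f"{name}-v{version}"


cache = AppCache()
first = cache.load("game", 1, fake_download)       # not cached yet: downloads
second = cache.load("game", 1, fake_download)      # served from the cache
offline = cache.load("game", None, fake_download)  # off-line: cached copy
updated = cache.load("game", 2, fake_download)     # new version: downloads
```

The user never runs an installer or an updater; the cache quietly does both jobs.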

If this is how it might work, Google have just disrupted the whole universe of content distribution. There is no need for software installation. No need for update services. No need for fancy package management like Debian's APT. It will be like a universal version of iPhone applications.

So you can look forward to Google Earth, Picasa and Google Office applications all running from the web without having to install them and keep them up-to-date.

So, if programs run fast, run anywhere, are secure and don't crash the browser or OS, why wouldn't you use it for other parts of an operating system? Did I mention that NaCl also supports POSIX threading and IO? Now it just needs a hardware layer, device drivers and a GUI.

We already know that ChromeOS will be based on Linux so just the GUI remains to be built. Or does it?

Chrome is the GUI

What if Chrome (the browser) IS the GUI? It already has a window manager - we call them tabs. They can be pulled out of the browser, minimised and resized - just like a window manager.

It already has a scripting language - JavaScript, and Chrome's V8 engine is fast.

It already supports HTML5, which covers video, audio and virtually everything that Adobe's Flash does.

And, it is getting hardware accelerated 3D graphics in the form of O3D.

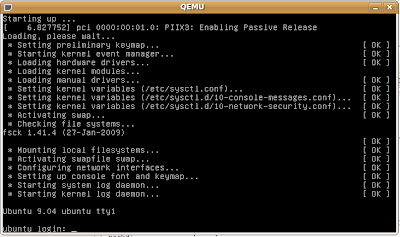

A version of the Chrome browser was released for the Chrome OS recently. It was a deb package so it is designed for, most probably, Ubuntu. It is not much different from the standard Chrome browser, but it does have a clock display, battery indicator and a hint of network settings.

My guess - there is no window manager apart from the Chrome browser.

In Summary

Native Client promises much:

- Any compiler can be modified to produce NaCl code.

- The compiler will make a program that will run on ARM and x86 (for now) CPUs.

- The program will run in a sandbox that restricts what it can do.

- Buggy programs can do no damage.

- Malicious programs like viruses and worms can do no damage, nor can they spread or modify files on your OS.

- It may be the enabling technology for ChromeOS.